Make Your 1st Streamlit App with Cohere’s Large Language Models

Make Your 1st Streamlit App with Cohere’s Large Language Models

Introduction

- Streamlit is a popular open-source framework for building web-based data visualization and machine learning tools.

- Cohere is a company that offers large language models trained on a wide range of data sources, including books, articles, and websites.

- In this tutorial, we will show you how to use Cohere’s large language models to build your first Streamlit app.

Set up your development environment

To get started, you will need to have Python 3 installed on your computer.Setup Python virtual environment:

python -m venv envNext, install the Streamlit and Cohere libraries using pip:

pip install streamlit

pip install cohere-pythonCohere

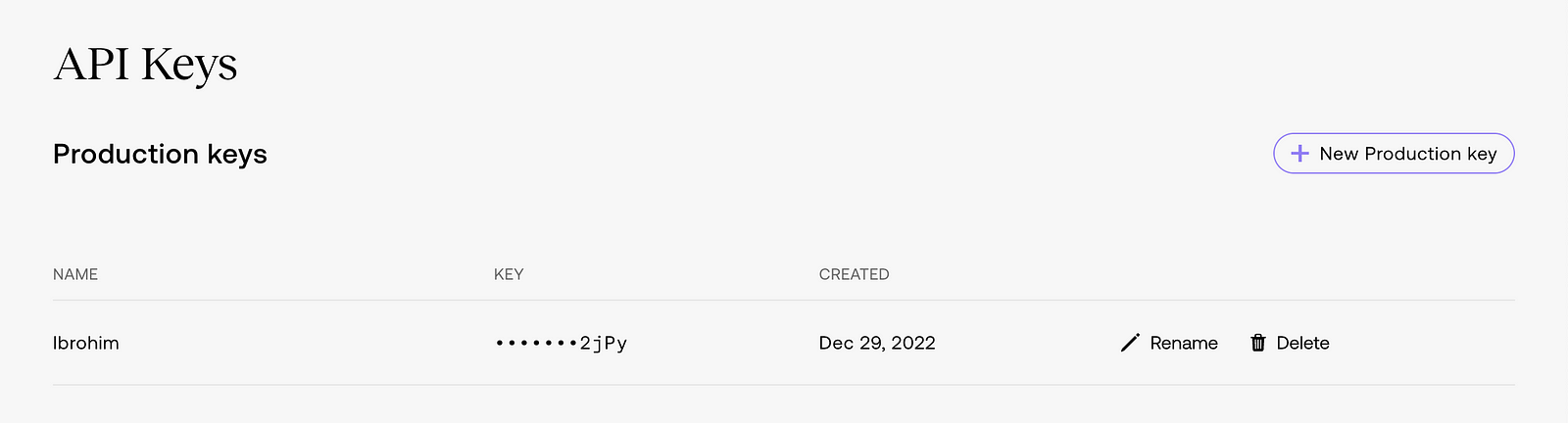

Choose a language model and obtain an API key

- Cohere offers a variety of language models, including models trained on general knowledge, scientific papers, and Wikipedia articles.

- To use a Cohere language model, you will need to obtain an API key from the Cohere website.

Streamlit

Write your Helper Class for Streamlit app

- Open a new file in Visual Studio code editor and import the Streamlit and Cohere libraries.

- Next, define a function that will use the Cohere API to generate text based on a prompt:

Go to your completion.py file import all necessary libraries and assign Cohere’s API key:

# Import from standard library

import os

import logging

from dotenv import load_dotenv

# Import from 3rd party libraries

import cohere

import streamlit as st

# Configure logger

logging.getLogger("complete").setLevel(logging.WARNING)

# Load environment variables

load_dotenv()

# Assign credentials from environment variable or streamlit secrets dict

co = cohere.Client(os.getenv("COHERE_API_KEY")) or st.secrets["COHERE_API_KEY"]Declare class named Completion:

# Declare Class Completion

class Completion:

def __init__(self, ):

passNow, add function which will be responsible for generating text based on a prompt:

def complete(prompt, max_tokens, temperature, stop_sequences):

"""

Call Cohere Completion with text prompt.

Args:

prompt: text prompt

max_tokens: max number of tokens to generate

temperature: temperature for generation

stop_sequences: list of sequences to stop generation

Return: predicted response text

"""

try:

response = co.generate(

model = 'xlarge',

prompt = prompt,

max_tokens = max_tokens,

temperature = temperature,

stop_sequences = stop_sequences)

return response.generations[0].text

except Exception as e:

logging.error(f"Cohere API error: {e}")

st.session_state.text_error = f"Cohere API error: {e}"Open a new file app.py.

In this file we will implement logic for Streamlit App.

Go to your app.py file import all necessary libraries:

# Import from standard library

import logging

# Import from 3rd party libraries

import streamlit as st

import streamlit.components.v1 as components

# Import modules

from completion import Completion

from supported_languages import SUPPORTED_LANGUAGES

from translation import Translator

# Configure logger

logging.basicConfig(format="\n%(asctime)s\n%(message)s", level=logging.INFO, force=True)Let’s Configure Front of our app.

- Use Streamlit’s widgets and markdown functions to create a user interface for your app.

Add following code to app.py:

# Configure Streamlit page and state

st.set_page_config(page_title="Co-Complete", page_icon="🍩")

# Store the initial value of widgets in session state

if "complete" not in st.session_state:

st.session_state.complete = ""

if "text_error" not in st.session_state:

st.session_state.text_error = ""

if "n_requests" not in st.session_state:

st.session_state.n_requests = 0

# Force responsive layout for columns also on mobile

st.write(

"""

<style>

[data-testid="column"] {

width: calc(50% - 1rem);

flex: 1 1 calc(50% - 1rem);

min-width: calc(50% - 1rem);

}

</style>

""",

unsafe_allow_html=True,

)

# Render Streamlit page

st.title("Complete Text")

st.markdown(

"This mini-app completes Sentences using Cohere's based [Model](https://docs.cohere.ai/) for texts."

)

st.markdown(

"You can find the code on [GitHub](https://github.com/abdibrokhim/CoComplete) and the author on [Twitter](https://twitter.com/abdibrokhim)."

)

# text

text = st.text_area(label="Enter text", placeholder="Example: I want to play")

# max tokens

max_tokens = st.slider('Pick max tokens', 0, 1024)

# temperature

temperature = st.slider('Pick a temperature', 0.0, 1.0)

# stop sequences

stop_sequences = st.text_input(label="Enter stop sequences", placeholder="Example: --")

# complete button

st.button(

label="Complete",

key="generate",

help="Press to Complete text",

type="primary",

on_click=complete,

args=(text, max_tokens, temperature, stop_sequences),

)Now, define a new function which will call our complete function form Completion class:

# Define functions for text completion

def complete(text, max_tokens, temperature, stop_sequences):

"""

Complete Text.

"""

if st.session_state.n_requests >= 5:

st.session_state.text_error = "Too many requests. Please wait a few seconds before completing another Text."

logging.info(f"Session request limit reached: {st.session_state.n_requests}")

st.session_state.n_requests = 1

return

st.session_state.complete = ""

st.session_state.text_error = ""

st.session_state.n_requests = 0

if not text:

st.session_state.text_error = "Please enter a text to complete."

return

with text_spinner_placeholder:

with st.spinner("Please wait while your text is being completed..."):

compeletion = Completion()

completed_text = compeletion.complete(translated_text, max_tokens, temperature, [stop_sequences])

st.session_state.text_error = ""

st.session_state.n_requests += 1

st.session_state.complete = (completed_text)

logging.info(

f"""

Info:

Text: {text}

Max tokens: {max_tokens}

Temperature: {temperature}

Stop_sequences: {stop_sequences}\n

"""

)continue…

Let’s make our Streamlit app multilingual

Simply create new file named translation.py and copy/paste following code:

# Import from standard library

import logging

# Import from 3rd party libraries

import streamlit as st

from deep_translator import GoogleTranslator

class Translator:

def __init__(self, ):

pass

@staticmethod

def translate(prompt, target):

"""

Call Google Translator with text prompt.

Args:

prompt: text prompt

target: target language

Return: translated text

"""

try:

translator = GoogleTranslator(source='auto', target=target)

t = translator.translate(prompt)

return t

except Exception as e:

logging.error(f"Google Translator API error: {e}")

st.session_state.text_error = f"Google Translator API error: {e}"Now, go again to app.py and update our function:

# Define functions for text completion

def complete(text, max_tokens, temperature, stop_sequences, from_lang="", to_lang=""):

"""

Complete Text.

"""

if st.session_state.n_requests >= 5:

st.session_state.text_error = "Too many requests. Please wait a few seconds before completing another Text."

logging.info(f"Session request limit reached: {st.session_state.n_requests}")

st.session_state.n_requests = 1

return

st.session_state.complete = ""

st.session_state.text_error = ""

# st.session_state.visibility = ""

# st.session_state.n_requests = 0

if not text:

st.session_state.text_error = "Please enter a text to complete."

return

with text_spinner_placeholder:

with st.spinner("Please wait while your text is being completed..."):

translation = Translator()

translated_text = translation.translate(text, "en")

compeletion = Completion()

completed_text = compeletion.complete(translated_text, max_tokens, temperature, [stop_sequences])

to_lang_code = SUPPORTED_LANGUAGES[to_lang]

result = translation.translate(completed_text, to_lang_code)

st.session_state.text_error = ""

st.session_state.n_requests += 1

st.session_state.complete = (result)

logging.info(

f"""

Info:

Text: {text}

Max tokens: {max_tokens}

Temperature: {temperature}

Stop_sequences: {stop_sequences}\n

From: {from_lang}

To: {to_lang}

"""

)Do not forget to add options for choosing language from - to.

# from to selector

col1, col2 = st.columns(2)

# from " " language

with col1:

from_lang = st.selectbox(

"From language",

([i for i in SUPPORTED_LANGUAGES]),

label_visibility=st.session_state.visibility,

)

# to " " language

with col2:

to_lang = st.selectbox(

"To language",

([i for i in SUPPORTED_LANGUAGES]),

label_visibility=st.session_state.visibility,

)

# complete button

st.button(

label="Complete",

key="generate",

help="Press to Complete text",

type="primary",

on_click=complete,

args=(text, max_tokens, temperature, stop_sequences, from_lang, to_lang),

)Get supported languages here, and include it in supported_languages.py

Now, run the Streamlit app using the following command:

streamlit run app.pyYour app should now be available at http://localhost:8501 in your web browser.

Conclusion

- In this tutorial, we showed you how to use Cohere’s large language models to build a Streamlit app for generating text.

- With a few lines of code, you can create a powerful tool for generating content, ideas, or prompts for creative writing or other applications.

Support for daily high quality articles:

Leave comment and share!.

Full code available on Github, give star if you like it.

Consider following on Medium

Consider following on Blogger

Tweet on Twitter

Want to collaborate/work with me?

Message me:

Learn programming for free

Do you have any questions or suggestions?

Message me:

Comments

Post a Comment